At City Innovation Labs we focus on applying the methodologies of lean startup to help innovation teams bring their ideas to life. One of the core principles of the lean startup methodology is to start small and make small iterations based on user feedback.

Search is a common feature in many products today. The time to build and deploy a fully realized search feature can take weeks. If your product already uses Postgres, the time to build a search feature can go from weeks to hours by using Postgres full text search features. That will ultimately help you launch your product faster. Once you identify that search is a feature that users want, you can build a fully-realized search feature using proven technologies like Elastic Search or Algolia. This post focuses on how to use the Postgres full text search (FTS) features to get your MVP out as soon as possible.

Most relational databases offer very simple text search functions like LIKE, ILIKE, SIMILAR ~, ~* and regexp_matches. However, there are some limitations to those functions that the full text search functionality overcomes. Postgres supports stemming words (i.e. race and racing will have a common stem), removing stop words (words that mean nothing when you are searching such as, ie, the, a, or to), and ranking. There are other cool features that are worth checking out in the official documentation.

This post will use the dataset from the Project Gutenburg index as an example database to search. By the end of this post, we’ll be able to look up books by title, description, author, and category.

Project Gutenburg offers its index available as a pile of RDF/ XML files. I wrote a script to take that file and dump it into a PostgreSQL database. You can download the Postgres dump here.

Using Postgres Full Text Search

Preparing the document

This example has a few tables that are used in the search. Here’s what the schema looks like:

CREATE TABLE books(

id TEXT,

title TEXT,

alternative TEXT,

language TEXT,

table_of_contents TEXT,

issued DATE,

downloads INT,

PRIMARY KEY (id)

);

CREATE TABLE creators(

id TEXT,

name TEXT,

webpage TEXT,

death_date TEXT,

birth_date TEXT,

PRIMARY KEY(id)

);

CREATE TABLE books_creators (

book_id TEXT REFERENCES books(id),

creator_id TEXT REFERENCES creators(id),

PRIMARY KEY (book_id, creator_id)

);

CREATE TABLE subjects(

id SERIAL,

value TEXT,

kind TEXT,

PRIMARY KEY(id)

);

CREATE TABLE books_subjects (

book_id TEXT REFERENCES books(id),

subject_id INT REFERENCES subjects(id),

PRIMARY KEY (book_id, subject_id)

);

CREATE TABLE files(

extant TEXT,

mime_type TEXT,

uri TEXT,

modified TIMESTAMP,

book_id TEXT REFERENCES books(id),

PRIMARY KEY(uri)

);We do not use all the fields on the tables when searching. Some are only used for informational purposes. The files table links to the files hosted by Project Gutenburg and won’t be used in the search. The first part of the search is to build up a document that can be indexed and searched. This document will include everything that a user may want to search on.

Initial Query

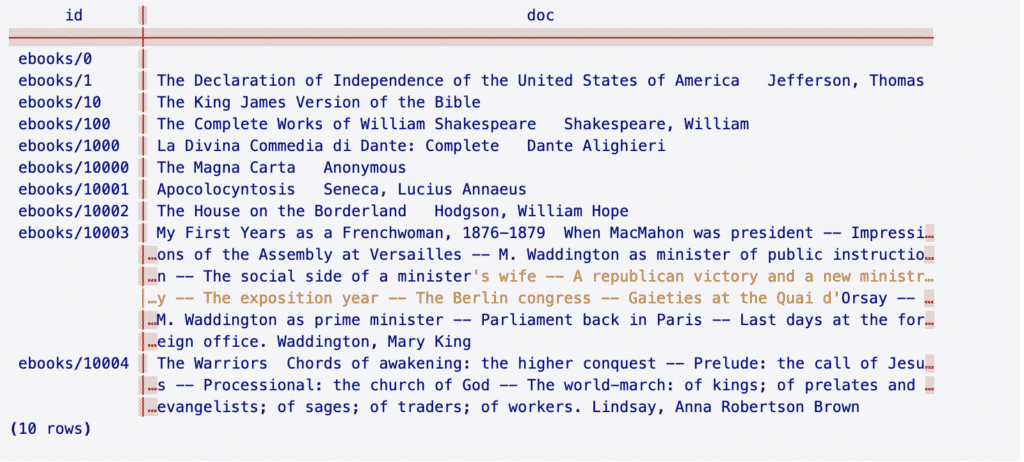

Below is an initial query. This sets up the search fields with all the necessary data. The query limits the results to ten rows because it is a test search.

SELECT books.id,

COALESCE(books.title, '') || ' ' ||

COALESCE(books.alternative, '') || ' ' ||

COALESCE(books.table_of_contents, '') || ' ' ||

COALESCE(creators.name, '') doc

FROM books

LEFT JOIN books_creators ON (books_creators.book_id = books.id)

LEFT JOIN creators ON (books_creators.creator_id = creators.id)

LIMIT 10;

Some of the fields may be null so the COALESCE function is used to default null to an empty text value. Otherwise, the entire document would have null value. The subjects table has some interesting data that users may want to include in the search, but we’ll include that at a different time.

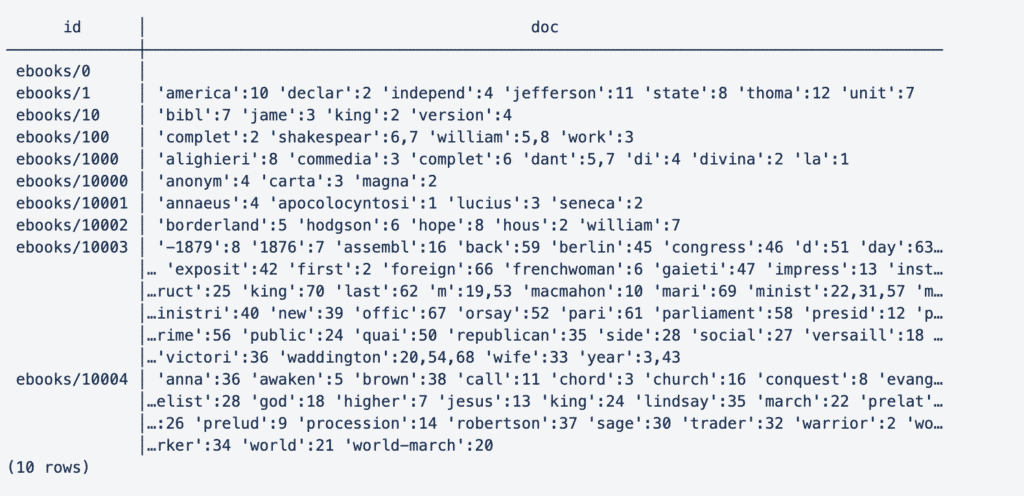

Now that the initial document is defined, it needs to be preprocessed into a special vector that has the words stemmed, the stop words removed, and the words ranked. (We’ll get to ranking later in the article). The data type is called a tsvector. The function that turns a document into a tsvector is to_tsvector.

SELECT books.id,

to_tsvector(COALESCE(books.title, '') || ' ' ||

COALESCE(books.alternative, '') || ' ' ||

COALESCE(books.table_of_contents, '') || ' ' ||

COALESCE(creators.name, '')) doc

FROM books

LEFT JOIN books_creators ON (books_creators.book_id = books.id)

LEFT JOIN creators ON (books_creators.creator_id = creators.id)

LIMIT 10;

In this output, the words are stemmed to a normalized token and the order of the word is to the right. In ebooks/10003 the last token “year” has 3,43 by it because it appears twice in the document.

Project Gutenburg has over 60,000 books. Generating the vectors on the fly for every search will be slow. For this example, utilize a materialized view to store the vectors. This method was chosen because the project is more read-heavy than write-heavy. A few books per day are contributed. They don’t have to show up in the index right away., It’s perfectly fine if there’s a nightly job that pulls in new books and refreshes the view.

For projects that are write-heavy, instead of using a materialized view, you may want to consider using a separate table that is either updated by the application or by triggers whenever data is inserted, updated, or deleted. You could also use another column on the data you want preprocessed. In this case, I recommend keeping the search data in a separate table since it’s pulled together from different tables.

Updated Query: Materialized View

CREATE MATERIALIZED VIEW simple_search AS

SELECT books.id,

to_tsvector(COALESCE(books.title, '') || ' ' ||

COALESCE(books.alternative, '') || ' ' ||

COALESCE(books.table_of_contents, '') || ' ' ||

COALESCE(creators.name, '')) doc

FROM books

LEFT JOIN books_creators ON (books_creators.book_id = books.id)

LEFT JOIN creators ON (books_creators.creator_id = creators.id);The documents can be preprocessed because the limits have been removed.

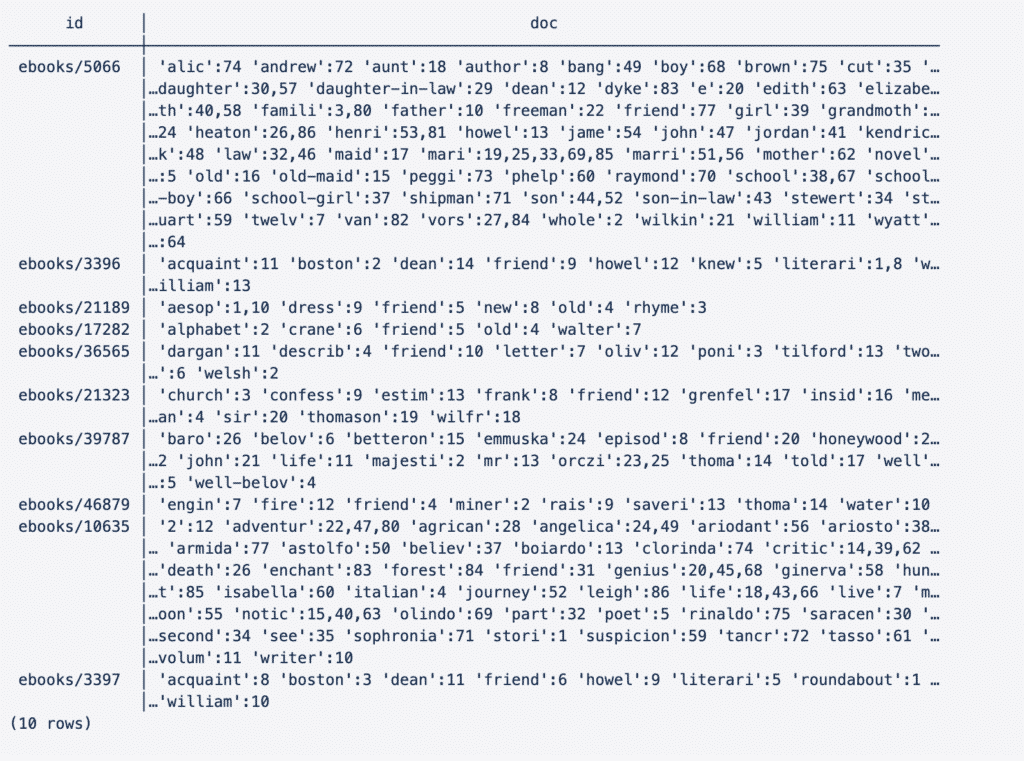

Searching the Document

Searching is easier because the document has been preprocessed and is in a materialized view. Later we’ll add an index on it to speed it up even more. Time to search the newly created vectors.

First, we’ll search for documents that have the word friend in them:

SELECT *

FROM simple_search

WHERE simple_search.doc @@ tsquery('friend')

LIMIT 10;

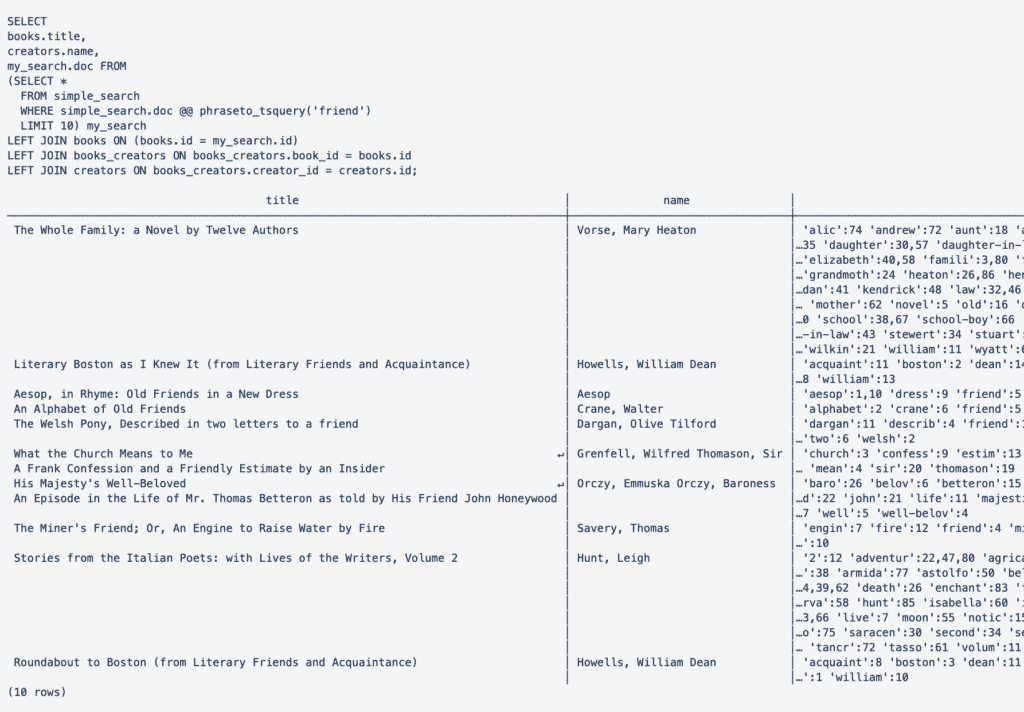

This is great, but this is not what users would want to see. Listing the title of the book and the author would be helpful to see what books were found. Updating the query to reflect the change is necessary. See the new query below.

SELECT

books.title,

creators.name,

my_search.doc FROM

(SELECT *

FROM simple_search

WHERE simple_search.doc @@ phraseto_tsquery('friend')

LIMIT 10) my_search

LEFT JOIN books ON (books.id = my_search.id)

LEFT JOIN books_creators ON books_creators.book_id = books.id

LEFT JOIN creators ON books_creators.creator_id = creators.id;

That is more informative. Notice that we select from a subquery that has a limit on it. This is so the books and creators are not joined on the entire search table.

Here are some useful functions for searching:

to_tsqueryplainto_tsqueryphraseto_tsquery

to_tsquery is the more powerful of the three because it supports some pretty nifty operators:

- & (AND)

- | (OR)

- ! (NOT)

- <-> (FOLLOWED BY)

If we were exposing this to the web to be searched, I would recommend using plainto_tsquery. It takes the words you pass to its and searches for the document that contains all the tokens. plainto_tsquery('war peace') is equivalent to to_tsquery('war & peace'). It looks for documents that have words similar to war and peace.

If I were building a typeahead though, I would want to use to_tsquery. I would remove any of the operators, parentheses, and other special characters from the search so the query wouldn’t throw an error. Then I would join the words together with the & operator and append to the last word :*. Then christmas ca would be turned into christmas & ca:*.

It’s worth checking out the documentation to see more examples of these operators in action.

Search Ranking

It’s important that the search show the most relevant results at the top. PostgreSQL’s ranking functions allow for this. The ts_rank function works by ranking results higher if the tokens in the query vector occur more frequently in the document vector. Here’s an example query:

SELECT

my_search.rank,

books.title,

creators.name,

my_search.doc,

query

FROM (SELECT

simple_search.id,

simple_search.doc,

query,

ts_rank(simple_search.doc, query) rank

FROM simple_search, to_tsquery('christmas & ca:*') AS query

WHERE query @@ simple_search.doc

ORDER BY rank DESC

LIMIT 50) my_search

LEFT JOIN books ON (books.id = my_search.id)

LEFT JOIN books_creators ON books_creators.book_id = books.id

LEFT JOIN creators ON books_creators.creator_id = creators.idThe documents say that ranking is expensive, however, this query returned 30,000 documents in 50 ms in 50 ms for 30,000 documents. That’s even without adding an index on the document as well.

Before moving on let’s add two indexes on the search view. The first is on the id. The materialized view is updated concurrently. The other will be on the document which should speed up the searches.

CREATE INDEX idx_fts_search ON simple_search USING gin(doc); CREATE UNIQUE INDEX idx_unq_search ON simple_search (id);That sped up the search. Now the results are returning in about 10 ms.

Using Weights to Better Rank Results

Using weight for certain items in a document helps ranking results. For example, the title of the book should be more relevant than the table of contents. Also, there’s the subjects table we could add to the document. This gives some more text to the document to help with searching. However, the subject is the lowest-weighted item. If a person were to search history, books with that in the title would show up first, then books with that in the subject would show up last. How items are weighted in the document is very application-specific.

Different weight categories are available to add to the document with PostgreSQL: A,B.C, and D.

Here’s a query to create a ranked document as a materialized view to search.

CREATE MATERIALIZED VIEW ranked_search AS

SELECT books.id,

setweight(to_tsvector(COALESCE(books.title, '') || ' ' || COALESCE(books.alternative, '')), 'A') ||

setweight(to_tsvector(COALESCE(creators.name, '')), 'B') ||

setweight(to_tsvector(COALESCE(books.table_of_contents, '')), 'C') ||

setweight(to_tsvector(COALESCE(subject_groups.subject_values, '')), 'D') AS doc

FROM books

LEFT JOIN books_creators ON (books_creators.book_id = books.id)

LEFT JOIN creators ON (books_creators.creator_id = creators.id)

LEFT JOIN (SELECT

books_subjects.book_id,

string_agg(subjects.value , ' ') subject_values

FROM subjects

LEFT JOIN books_subjects ON (books_subjects.subject_id = subjects.id)

GROUP BY books_subjects.book_id) subject_groups

ON (subject_groups.book_id = books.id);We’ll also index it:

CREATE UNIQUE INDEX idx_unq_search_ranked ON ranked_search (id);CREATE INDEX idx_fts_search ON ranked_search USING gin(doc);This can be searched liked the last view, except the view names need to be changed. See below:

SELECT

my_search.rank,

books.title,

creators.name,

my_search.doc,

query

FROM (SELECT

simple_search.id,

simple_search.doc,

query,

ts_rank(simple_search.doc, query) rank

FROM simple_search, to_tsquery('christmas & ca:*') AS query

WHERE query @@ ranked_search.doc

ORDER BY rank DESC

LIMIT 50) my_search

LEFT JOIN books ON (books.id = my_search.id)

LEFT JOIN books_creators ON books_creators.book_id = books.id

LEFT JOIN creators ON books_creators.creator_id = creators.idThis should be enough to get started to build a search feature with Postgres in English.

When Not to Use Postgres Full Text Search

Postgres works great to get implement an MVP of search in a project. However, as the project grows, you may find that your users may need more than what Postgres FTS has to offer. Here are some signs that it may be time to switch to something else like Elastic Search, Sphinx, or Algolia.

- If you need massive scale. From the research I’ve done, people have had success with Postgres FTS with over 20 million documents. But there may come a point where your application will outgrow what Postgres can offer. At that point, you may want to look for something else.

- If you need support for multiple languages. Postgres’ full text search only supports a handful of languages well. From the research I’ve done, Elastic search/Solr supports multiple languages much better.

- If you need fine-grain control over the search ranking. Postgres offers a few ranking algorithms out of the box., However, if you need something outside of those, you may want to look elsewhere. The Postgres documentation says you write your own ranking algorithms, though, they need to be written in C. Other products offer more flexible ranking algorithms.

What’s Next?

For my next post, I plan on creating a web front end for the search results. I’ll show how to create a typeahead in 3 different front end languages using this dataset as a back end. We’ll look at React (JavaScript), Angular (TypeScript), and Reagent/Reframe (ClojureScript).

Further Reading on Postgres Full Text Search

- The official PostgreSQL documentation

- http://rachbelaid.com/postgres-full-text-search-is-good-enough/

- http://web.archive.org/web/20190418012835/http://www.shisaa.jp/postset/postgresql-full-text-search-part-1.html

- http://web.archive.org/web/20190417191152/http://shisaa.jp/postset/postgresql-full-text-search-part-2.html

- http://web.archive.org/web/20190418012731/http://shisaa.jp/postset/postgresql-full-text-search-part-3.html